03 Aug The Apple of My AI: The Profound Future Ahead for Behaviour Tech

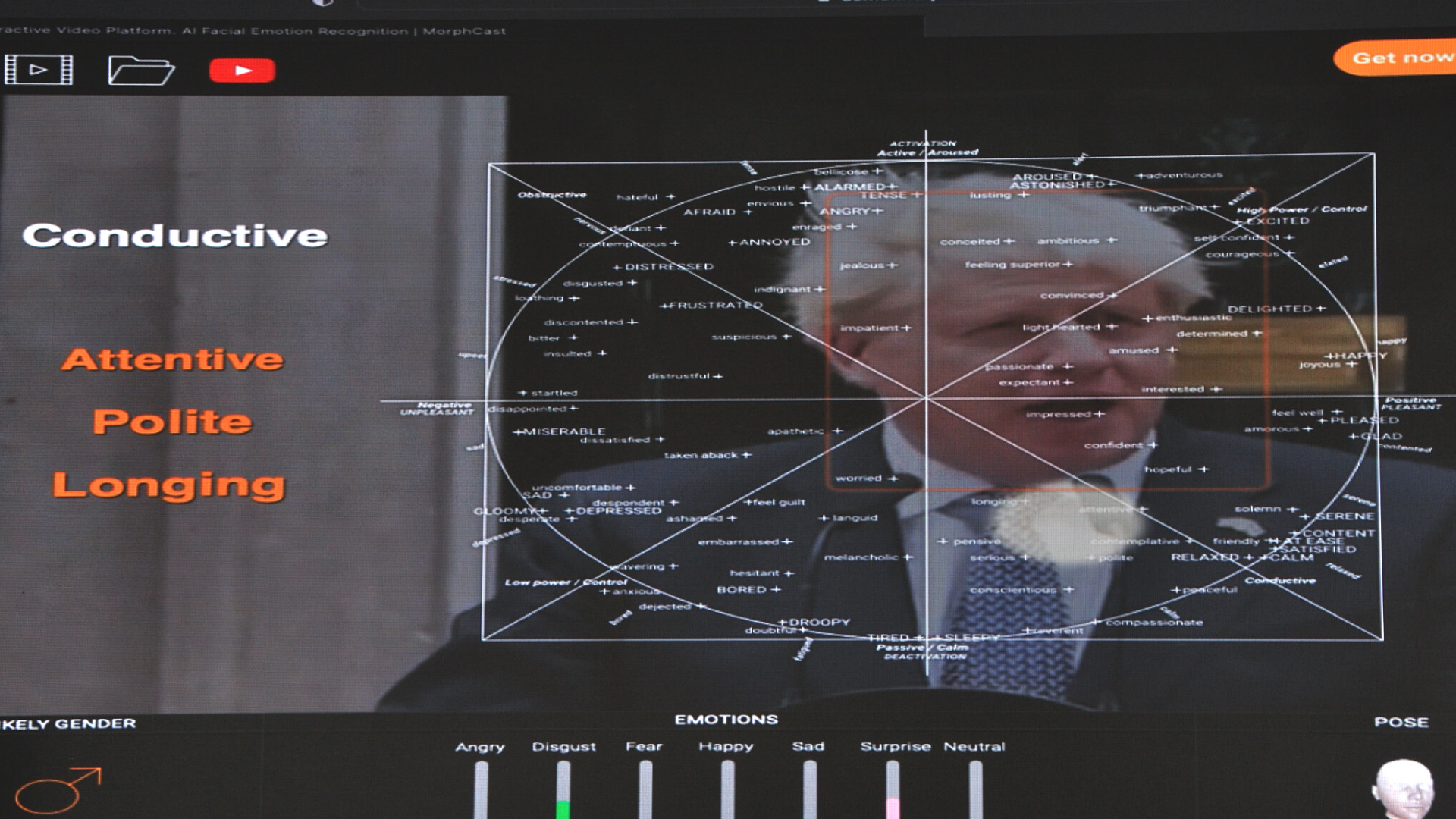

We’re sitting at the Behaviour Tech event, watching Yellowspot’s AI program, Sens, read Boris Johnson’s mood as he delivers his resignation speech. He always looked to me like a yellow orangutan, one that didn’t quite make it to the traditional flaming orange hue for lack of natural sunlight.

The AI is throwing out words like ‘pensive’ and ‘embarrassed’ as it reads Johnson’s face. No surprise there. What IS surprising is how accurately the program analyses the former UK Prime Minister’s obnoxiously pompous and eternally scruffy self. According to a poll for The Times, the most common word used to describe Boris Johnson was apparently “liar.” Can behaviour tech like Sens determine whether someone is lying?

“Certainly,” says Per Lagerstrom, a former McKinsey guy (before the scandals, he assures me) and CEO of Yellowspot. “We leak signals all over the place: through our skin, our eyes, our speech. Lying is a relatively easy thing to spot. However, that is not our focus. We are working on AI with the ability to interact with the human world.”

Just where does the opportunity in behaviour technology lie? For consumers, it may mean no longer being subjected to incessant, shooting-in-the-dark targeting; we could have a much more personalised experience if a friendly, maybe even sexy avatar smiles at us and tells us to buy this air fryer instead of that one. For venture builders, the opportunity lies in knowing whether consumers trust the brand. In behaviour tech, trust is a precisely measurable thing.

But would you be able to trust a robot? In a past interview, I asked Per whether you could fall in love with one, and his answer, backed by a whole bunch of study data, was a resounding yes. The Lagerstrom family has a little robot called Vector, who reminded me a lot of Pixar’s loveable robot character WALL-E. With expressive eyes and adorable little grunts and exclamations, Vector is a cutie, and a much-loved and accepted member of the fam.

When I think of trusting an avatar, though, I can’t help but think of Blade Runner. In this cult dystopian sci-fi flick, ‘replicants’ that look, talk and bleed just like us want to escape the slavery that humans have wittingly or unwittingly thrust upon them. Across many works of fiction, humanoids are constantly trying to kill us. And there is a pervasive ethical dimension here that will inevitably rise to the surface at some point. I mean, we are struggling with our own human rights; can you imagine what will happen when humanoids meet Trump supporters?

During the presentation, we are introduced to Anna, a brunette with pretty eyes and a soothing voice. She is the favourite avatar of Jacqui Young, co-founder and product lead of Yellowspot, who talks excitedly about how behaviour tech can transform efficiency:

“We are already connected through multiple devices and have ample access to information, but here we can interact on a more personal level. With personalisation and personality, we build trust. Within a couple of years, we could have a personalised avatar for 50 million consumers,” she said.

Measuring trust brings favourable outcomes. According to Young, we can currently determine brand trust only 50% of the time, and with just 30% efficiency: “Is this really the best we can do?! We can start to close this gap through awareness and clever engines,” she said.

Alarmingly, the numbers suggest that humans can’t trust their own instincts. “Humans are logical and sophisticated, and we think forward in a way no other species can. But we are also filled with idiosyncrasies: we make shit up all the time! We are often irrational. The unconscious data in our heads influences 90 to 95% of our behaviour,” Lagerstrom said.

Apparently, when we communicate, only 30% of it is words; the other 70% is what we’re not saying. Enter ‘Deep Listening’: using AI to read you as a human would, only better and more precisely, revealing true meaning and true intent.

“Accurate language analysis enables the tech to see and hear, and eventually think and make predictions around certain behaviours. It enables dynamic interactions. It determines how succinctly and effectively my intended message gets across,” said Young.

What does this mean? “It means we don’t need traditional focus groups any more. And no more applauding average lead scores and treating them as wins. It’s the low-effort, high-reward nature of it that will make everyone’s lives easier. For instance, I don’t want to have to give my ID number a million times to get to the place I’m looking for,” Young said.

Finally, we are shown that Lagerstrom, who tends to buy Apple products above all others and frequently lauds the brand when asked about it, actually exhibited 43% neutrality towards it. It raises the question: could it be that you don’t love your brand as much as you think you do? And if so, can we change your mind, please?

The prospects for behaviour tech look mighty interesting, amazing even, and span all industries and verticals. Do you sense the magic, too?